CDC Process Architecture

The Fabric CDC process aggregates LUI data updates in the MicroDB and publishes a CDC message to the CDC consumer about committed changes.

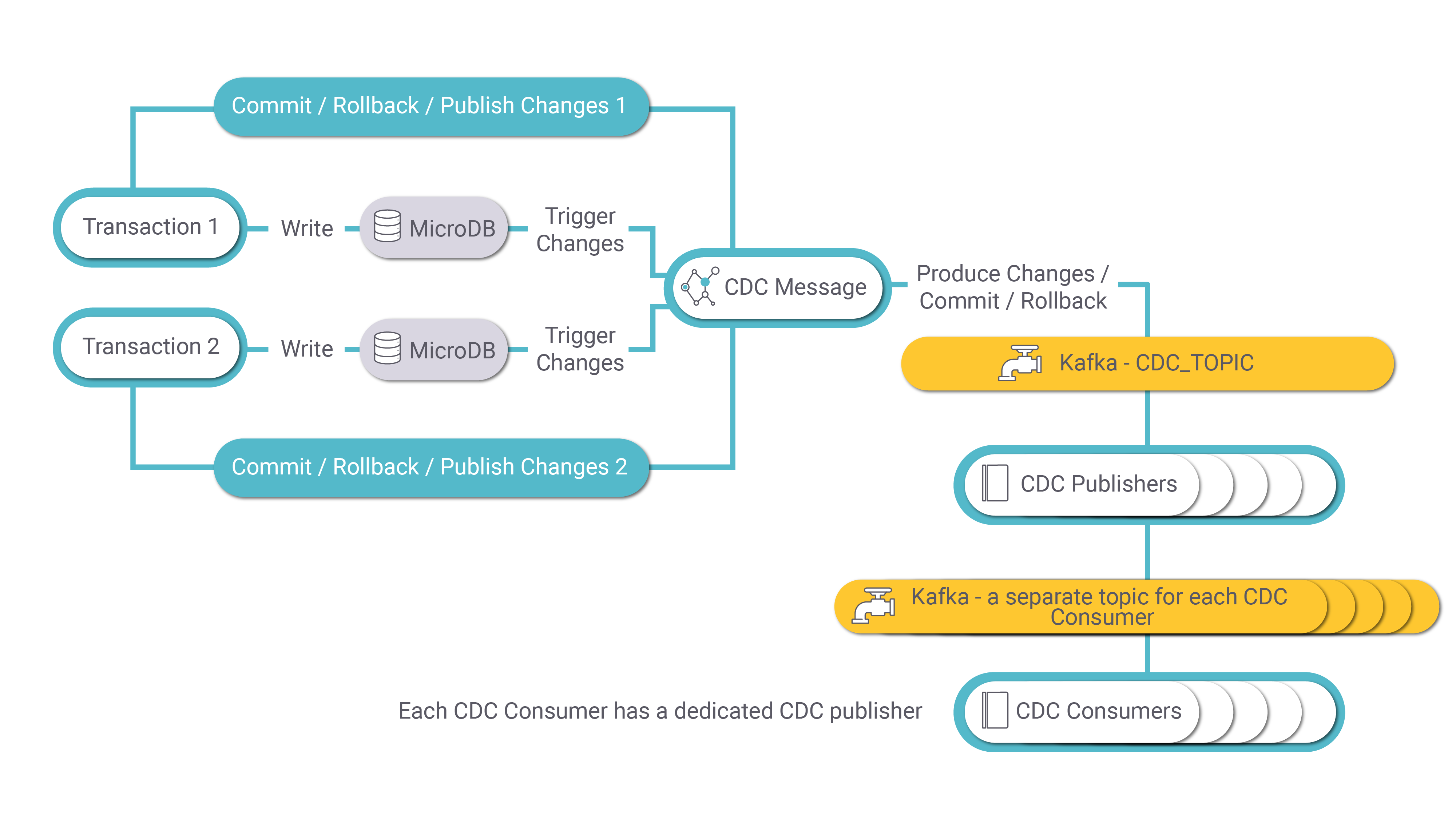

The following diagram describes the CDC process:

MicroDB Update

A transaction on an LUI may involve several updates in several of its LU tables. Each update (write) in the MicroDB SQLite file of the LUI activates a Fabric trigger that sends the change to the CDC Message. The CDC Message publishes a message to Kafka for each INSERT, UPDATE, or DELETE event in the MicroDB. Each message has the LUI (iid), event type, old and new values of each CDC column, PK columns of the LU table and transaction ID.

If the transaction is committed, a Commit message is sent by the CDC Message.

If the transaction is interrupted, rolled back or failed, a Rollback message is sent by the CDC Message.

CDC Message

The CDC Message publishes transaction messages to Kafka for each UPDATE, INSERT or DELETE activity. Kafka uses the CDC_TOPIC topic name for keeping transaction messages. The partition key is the LUI (iid).

The Data to Kafka is being produced asynchronously and parallelized for each MicroDB (LUI).

CDC Publisher

When the MicroDB is saved into Cassandra, the transaction's thread sends a Publish Acknowledge message to the Kafka CDC_TOPIC.

The CDC_TRANSACTION_PUBLISHER job consumes the transaction messages from Kafka and creates CDC messages for each transaction. Each CDC consumer has its own Kafka topic and its own CDC Publisher job. Note that Fabric concatenates the cluster id to each topic name if there are several Fabric clusters on one Cassandra cluster.

By default, Fabric starts one instance of a CDC Publisher job per CDC type. In case the Kafka topic has multiple partitions, it is recommended to start additional instances of the CDC Publisher jobs and start one CDC Publisher jobs instance per each Kafka partition. Kafka binds a partition to a job instance.

See an example of how to start a CDC Publisher job instance using the startjob Fabric command:

startjob CDC_TRANSACTION_PUBLISHER NAME='CDC_TRANSACTION_PUBLISHER' UID='PUBLISHER1' ARGS='{"consume_topic":"CDC_TOPIC","publish_topic":"Search","cdc_type":"Search","env":"_dev","group_id":"Search_gid", "max.poll.interval.ms":"100", "max.poll.records":"100", "session.timeout.ms:" "1000"} ';

A separate UID needs to be set on each job instance. The "publish_topic" attribute needs to be populated by the CDC type, and the "group_id" needs to be populated by a concatenation of the CDC type and "_gid".

Notes:

Each transaction can generate multiple CDC messages. For example, if an LUI sync inserts five records into an LU table, a separate CDC message is generated for each insert.

All CDC messages initiated by a given transaction have the same value in their trxId property.

Each CDC message has its own value in the msgNo property.

The msgCount property of each CDC message is populated by the number of CDC messages initiated by a transaction for a given CDC consumer.

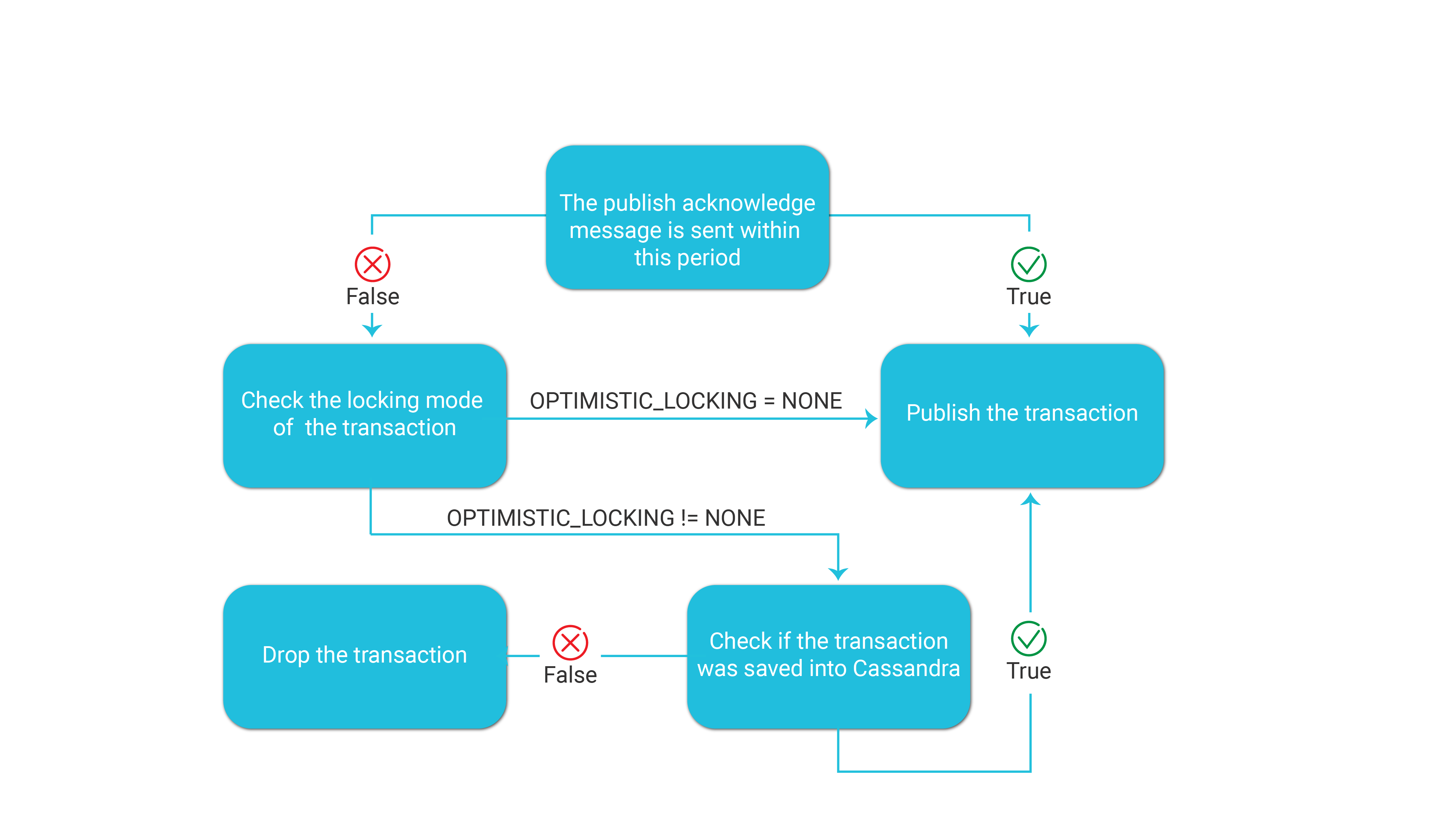

TRANSACTION_ACKNOWLEDGE_TIME_SEC Parameter

The Fabric config.ini file defines the following parameter which sets the maximum waiting time between the commit of the transaction and the Publish Acknowledge message which is sent when the transaction is successfully saved into Cassandra:

- TRANSACTION_ACKNOWLEDGE_TIME_SEC=60

The default value of this parameter is 60 seconds.

The following diagram displays how Fabric handles this parameter:

Note that the OPTIMISTIC_LOCKING parameter in the config.ini file can be set per node to support lightweight transactions between nodes when saving the LUIs into Cassandra.

CDC Consumer

The data is kept in Kafka with a separate topic for each Fabric's consumer.

Search Consumer

Fabric has a built-in integration with Elasticsearch. The search loader job starts automatically when deploying an LU with search indexes. The Jobs UID is Search. The CDC consumer job consumes the messages in the Kafka Search topic and feeds ElasticSeach with the MicroDB's changes to create search indexes in Elasticsearch. The search loader job works as follows:

- Data is consumed synchronously, ordered for each microdb instance’s changes.

- Data is transferred to ElasticSearch asynchronously for each change.

- Before UPDATE/DELETE changes the ElasticSearch index is refreshed to reflect the most available data state.

Each search loader job has a default of 5 concurrent threads for the INSERT changes, 1 thread for UPDATE changes, and 1 thread for the DELETE changes. Note that the number of concurrent threads for the INSERT changes is configurable (BULK_PROCESSOR_MAX_CONCURRENT_WORKERS config.ini parameter).

Click for more information about Fabric Search capabilities.

CDC Transaction Debug

The DEBUG_CDC_JOB Fabric job can be run as a CDC consumer to debug a CDC topic whereby it consumes the CDC messages of a given CDC topic and writes them to the log file.

Example:

startjob DEBUG_CDC_JOB name='DEBUG_CDC_JOB' ARGS='{"topic":Tableau", "group_id": "tableau"}';

CDC Process Architecture

The Fabric CDC process aggregates LUI data updates in the MicroDB and publishes a CDC message to the CDC consumer about committed changes.

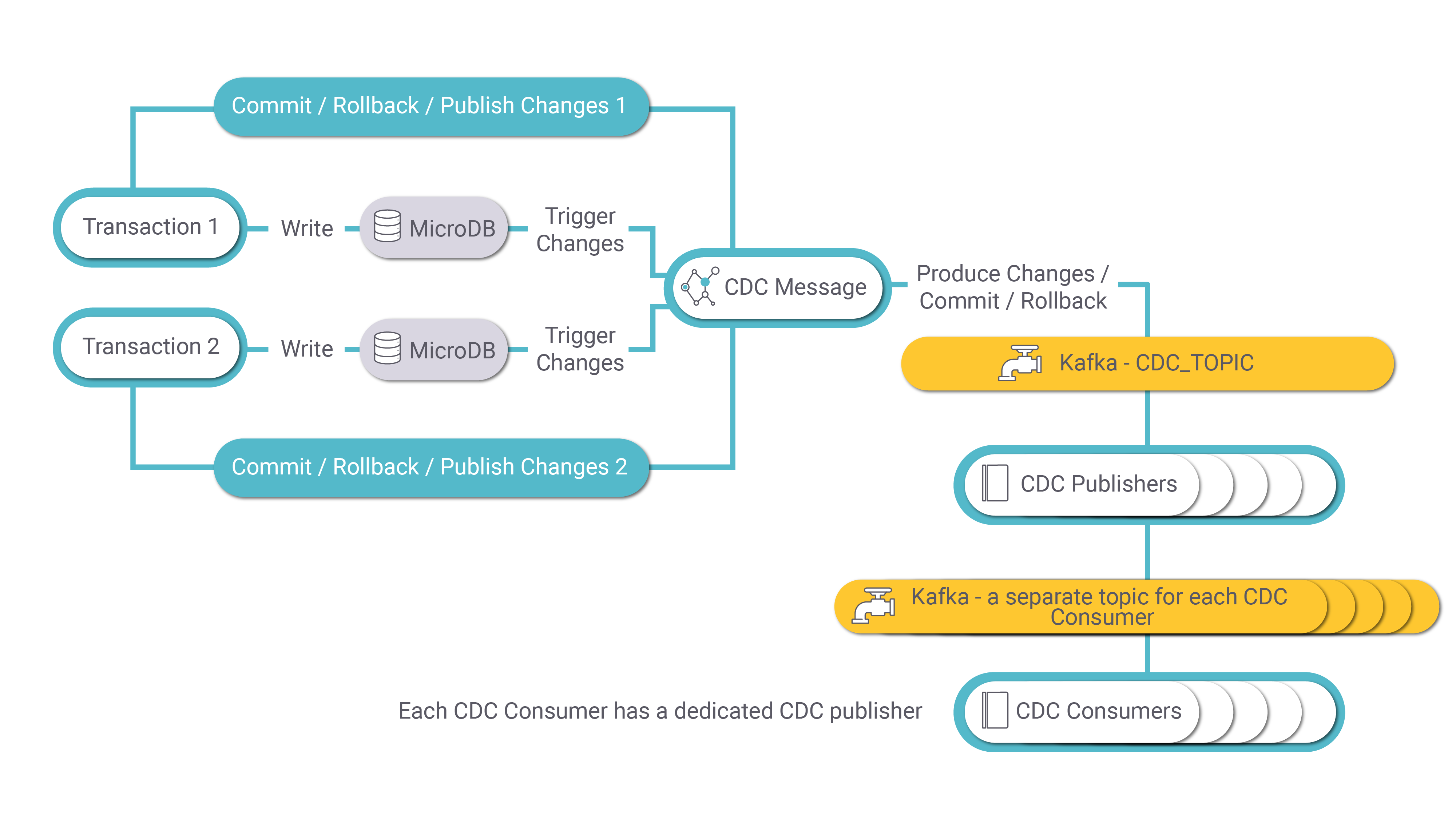

The following diagram describes the CDC process:

MicroDB Update

A transaction on an LUI may involve several updates in several of its LU tables. Each update (write) in the MicroDB SQLite file of the LUI activates a Fabric trigger that sends the change to the CDC Message. The CDC Message publishes a message to Kafka for each INSERT, UPDATE, or DELETE event in the MicroDB. Each message has the LUI (iid), event type, old and new values of each CDC column, PK columns of the LU table and transaction ID.

If the transaction is committed, a Commit message is sent by the CDC Message.

If the transaction is interrupted, rolled back or failed, a Rollback message is sent by the CDC Message.

CDC Message

The CDC Message publishes transaction messages to Kafka for each UPDATE, INSERT or DELETE activity. Kafka uses the CDC_TOPIC topic name for keeping transaction messages. The partition key is the LUI (iid).

The Data to Kafka is being produced asynchronously and parallelized for each MicroDB (LUI).

CDC Publisher

When the MicroDB is saved into Cassandra, the transaction's thread sends a Publish Acknowledge message to the Kafka CDC_TOPIC.

The CDC_TRANSACTION_PUBLISHER job consumes the transaction messages from Kafka and creates CDC messages for each transaction. Each CDC consumer has its own Kafka topic and its own CDC Publisher job. Note that Fabric concatenates the cluster id to each topic name if there are several Fabric clusters on one Cassandra cluster.

By default, Fabric starts one instance of a CDC Publisher job per CDC type. In case the Kafka topic has multiple partitions, it is recommended to start additional instances of the CDC Publisher jobs and start one CDC Publisher jobs instance per each Kafka partition. Kafka binds a partition to a job instance.

See an example of how to start a CDC Publisher job instance using the startjob Fabric command:

startjob CDC_TRANSACTION_PUBLISHER NAME='CDC_TRANSACTION_PUBLISHER' UID='PUBLISHER1' ARGS='{"consume_topic":"CDC_TOPIC","publish_topic":"Search","cdc_type":"Search","env":"_dev","group_id":"Search_gid", "max.poll.interval.ms":"100", "max.poll.records":"100", "session.timeout.ms:" "1000"} ';

A separate UID needs to be set on each job instance. The "publish_topic" attribute needs to be populated by the CDC type, and the "group_id" needs to be populated by a concatenation of the CDC type and "_gid".

Notes:

Each transaction can generate multiple CDC messages. For example, if an LUI sync inserts five records into an LU table, a separate CDC message is generated for each insert.

All CDC messages initiated by a given transaction have the same value in their trxId property.

Each CDC message has its own value in the msgNo property.

The msgCount property of each CDC message is populated by the number of CDC messages initiated by a transaction for a given CDC consumer.

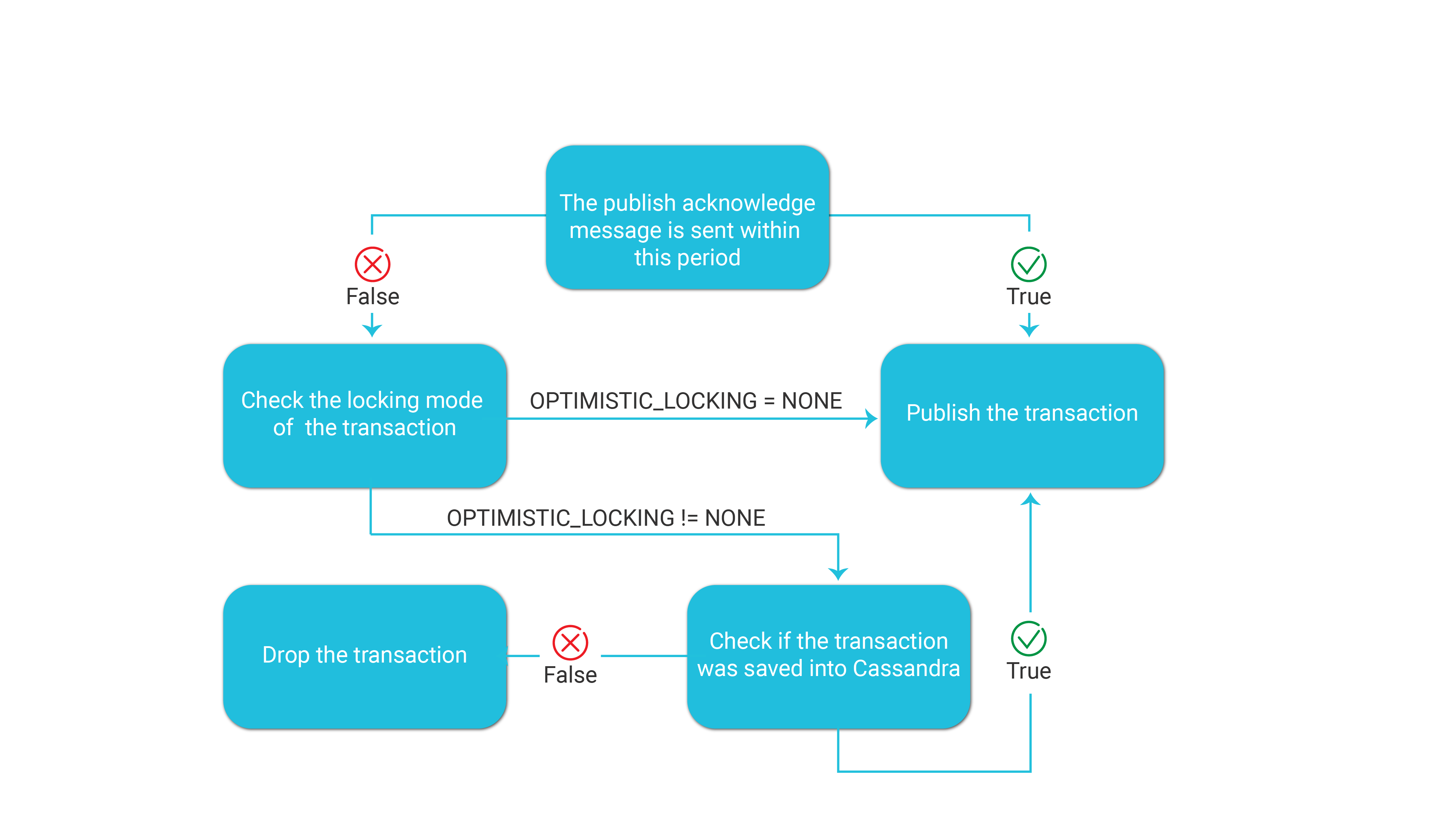

TRANSACTION_ACKNOWLEDGE_TIME_SEC Parameter

The Fabric config.ini file defines the following parameter which sets the maximum waiting time between the commit of the transaction and the Publish Acknowledge message which is sent when the transaction is successfully saved into Cassandra:

- TRANSACTION_ACKNOWLEDGE_TIME_SEC=60

The default value of this parameter is 60 seconds.

The following diagram displays how Fabric handles this parameter:

Note that the OPTIMISTIC_LOCKING parameter in the config.ini file can be set per node to support lightweight transactions between nodes when saving the LUIs into Cassandra.

CDC Consumer

The data is kept in Kafka with a separate topic for each Fabric's consumer.

Search Consumer

Fabric has a built-in integration with Elasticsearch. The search loader job starts automatically when deploying an LU with search indexes. The Jobs UID is Search. The CDC consumer job consumes the messages in the Kafka Search topic and feeds ElasticSeach with the MicroDB's changes to create search indexes in Elasticsearch. The search loader job works as follows:

- Data is consumed synchronously, ordered for each microdb instance’s changes.

- Data is transferred to ElasticSearch asynchronously for each change.

- Before UPDATE/DELETE changes the ElasticSearch index is refreshed to reflect the most available data state.

Each search loader job has a default of 5 concurrent threads for the INSERT changes, 1 thread for UPDATE changes, and 1 thread for the DELETE changes. Note that the number of concurrent threads for the INSERT changes is configurable (BULK_PROCESSOR_MAX_CONCURRENT_WORKERS config.ini parameter).

Click for more information about Fabric Search capabilities.

CDC Transaction Debug

The DEBUG_CDC_JOB Fabric job can be run as a CDC consumer to debug a CDC topic whereby it consumes the CDC messages of a given CDC topic and writes them to the log file.

Example:

startjob DEBUG_CDC_JOB name='DEBUG_CDC_JOB' ARGS='{"topic":Tableau", "group_id": "tableau"}';